How I Built a Redis LRU Cache With Python & AWS

Published: April 15, 2025

I recently wanted to reduce the latency of calls to an API I built. The API updates the prices of orders placed on a storefront that sells things like hoodies and artwork. It adjusts each order's price based on rules such as where the order originates and what category it falls under. These rules are stored in a Postgres database, which handles OLTP workloads quickly, but I wanted the lookups to be even faster.

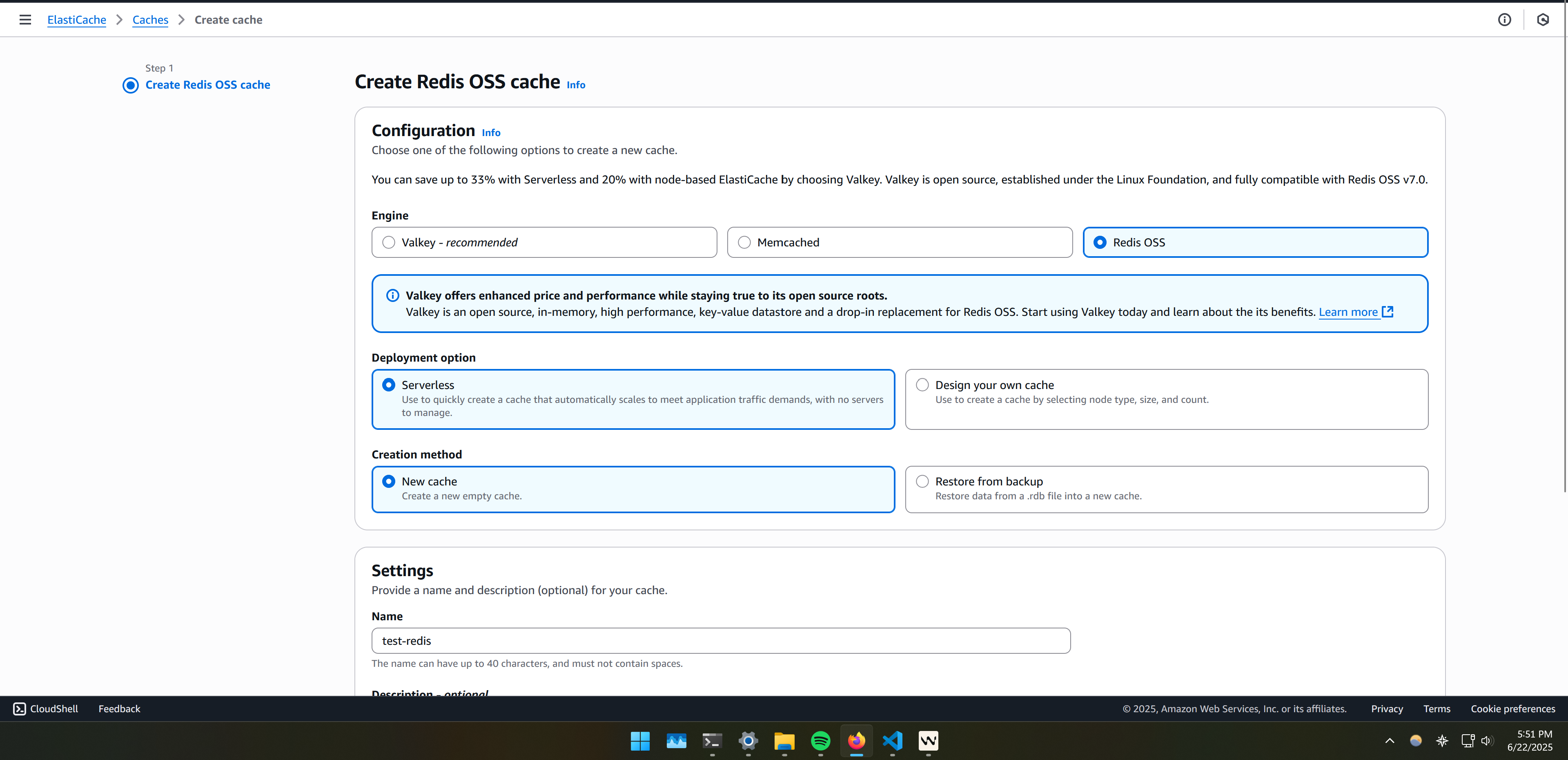

I decided to use AWS ElastiCache with Redis as a caching layer on top of my Postgres database. A caching layer should noticeably speed up my API calls, since rule lookups that hit the cache no longer have to go to Postgres. Specifically, an LRU (least recently used) cache seemed like the best fit for my use case.
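To make the idea concrete, here is a minimal sketch of the pattern in plain Python: check the cache first, fall back to the database on a miss, and evict the least recently used entry when the cache is full. Everything in it is an assumption for illustration. The `LRUCache` class is an in-memory stand-in for Redis, `RULES_DB` is a made-up dictionary standing in for the Postgres rules table, and the `rule:{origin}:{category}` key format and the multiplier values are hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """Tiny in-memory LRU cache (a stand-in for Redis, for illustration only)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._items = OrderedDict()

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def set(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry


# Hypothetical rules keyed by (origin, category); stands in for the Postgres table.
RULES_DB = {
    ("US", "hoodie"): 1.10,
    ("CA", "hoodie"): 1.15,
    ("US", "artwork"): 1.25,
}
db_reads = []  # records every lookup that had to go to the "database"


def load_rule_from_db(origin, category):
    db_reads.append((origin, category))
    return RULES_DB[(origin, category)]


def get_rule(cache, origin, category):
    """Return the rule from the cache, loading and caching it on a miss."""
    key = f"rule:{origin}:{category}"  # hypothetical key format
    rule = cache.get(key)
    if rule is None:
        rule = load_rule_from_db(origin, category)
        cache.set(key, rule)
    return rule
```

With a capacity of 2, looking up the same rule twice only reads the database once, and a third distinct rule pushes out whichever of the first two was used least recently. With Redis, the eviction part is handled by the server rather than by application code.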

The first thing I saw when setting up Redis on ElastiCache was the AWS ElastiCache dashboard.

I did some basic setup here and kept most options at their defaults. I don't need a highly customized setup, since my API isn't serving a Fortune 500 company that handles 10K requests a second. If I ever needed to, though, I could pretty easily customize the setup to handle that throughput.